Understanding Event-Driven Scraping Architecture

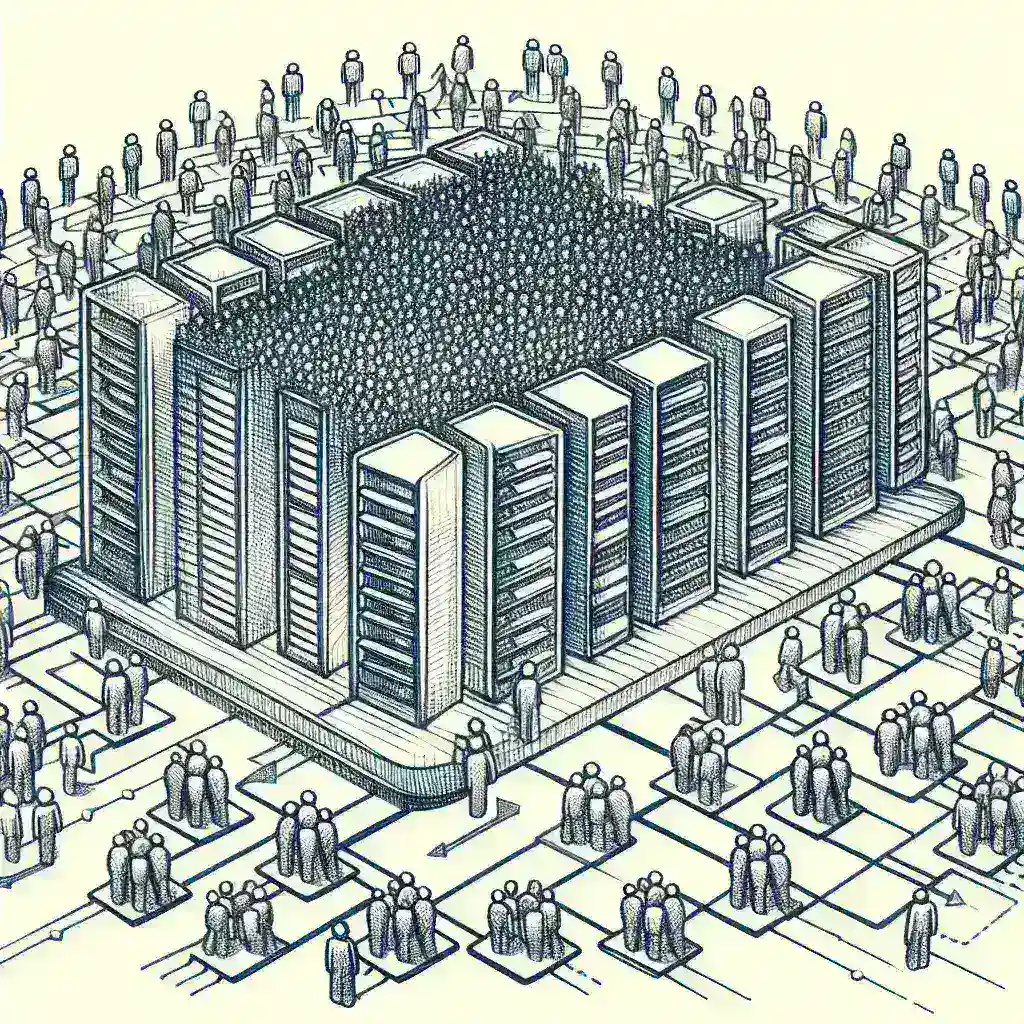

In today’s data-driven landscape, traditional scraping methods often fall short when dealing with large-scale operations. Event-driven scraping replaces sequential, blocking operations with asynchronous, reactive ones. Instead of waiting for each response before sending the next request, the system keeps many requests in flight at once, which is what lets it handle thousands of concurrent requests efficiently. Events act as the triggers for scraping work, making the system more responsive and easier to scale.

The fundamental principle behind event-driven scraping is decoupling the initiation of scraping tasks from their execution. Instead of running scrapers on fixed schedules or in linear sequences, the system responds to specific events such as data updates, user requests, or external triggers. Workers only run when an event arrives, and they pick it up as soon as it is published rather than at the next scheduled run. This improves resource utilization and reduces latency.

Core Components of a Scalable Event-Driven Scraper

Message Queue System

The backbone of any event-driven scraper is a robust message queue system. Message queues serve as the communication layer between different components, ensuring reliable delivery of scraping tasks and maintaining system resilience. Popular choices include Apache Kafka, RabbitMQ, and Amazon SQS, each offering unique advantages for different use cases.

Apache Kafka excels in high-throughput scenarios where data streaming is crucial, while RabbitMQ provides excellent flexibility for complex routing patterns. Amazon SQS is a fully managed service that integrates directly with other AWS services and requires minimal maintenance. The choice depends on your specific requirements regarding throughput, latency, and infrastructure preferences.

Event Producers and Consumers

Event producers generate scraping tasks based on various triggers such as scheduled intervals, API calls, or external data changes. These producers publish events to the message queue, including essential metadata like target URLs, scraping parameters, and priority levels.
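A producer's event can be as simple as a serializable record carrying those fields. The sketch below assumes JSON message bodies. `ScrapeTask`, its field names, and the convention that a lower `priority` number means "more urgent" are all illustrative choices, not a format required by any particular broker.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class ScrapeTask:
    """Hypothetical event payload that a producer publishes to the queue."""
    url: str
    params: dict[str, Any] = field(default_factory=dict)  # scraping parameters
    priority: int = 5   # assumption: lower number = more urgent
    attempt: int = 0    # incremented by consumers on retry
    task_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_message(self) -> bytes:
        # JSON bytes are a body format that Kafka, RabbitMQ, and SQS clients can all carry.
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")

    @classmethod
    def from_message(cls, body: bytes) -> "ScrapeTask":
        return cls(**json.loads(body.decode("utf-8")))


task = ScrapeTask(url="https://example.com/products", params={"render_js": False}, priority=1)
restored = ScrapeTask.from_message(task.to_message())
```

A producer would hand `task.to_message()` to its broker client, and a consumer would rebuild the task with `ScrapeTask.from_message(body)`.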

Event consumers, on the other hand, subscribe to these events and execute the actual scraping operations. Multiple consumers can process events concurrently, enabling horizontal scaling based on workload demands. This separation allows for independent scaling of event generation and processing capabilities.
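Within a single process, you can sketch the producer/consumer split with `asyncio.Queue` standing in for the external message queue. This is a simplification. In production the queue would be Kafka, RabbitMQ, or SQS, and `fake_scrape` is a hypothetical placeholder for the real fetch-and-parse step.

```python
import asyncio


async def fake_scrape(url: str) -> str:
    # Hypothetical placeholder for a real HTTP fetch and parse.
    await asyncio.sleep(0.01)
    return f"content of {url}"


async def consumer(queue: asyncio.Queue, results: dict) -> None:
    # Each consumer pulls events until it is cancelled.
    while True:
        url = await queue.get()
        try:
            results[url] = await fake_scrape(url)
        except Exception as exc:  # keep the consumer alive; record the failure
            results[url] = exc
        finally:
            queue.task_done()


async def run(urls: list, num_consumers: int = 4) -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    for url in urls:  # producer side: publish one event per URL
        queue.put_nowait(url)
    # Consumer side: concurrency is chosen here, independently of the producer.
    workers = [asyncio.create_task(consumer(queue, results)) for _ in range(num_consumers)]
    await queue.join()  # wait until every published event has been processed
    for worker in workers:
        worker.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Calling `asyncio.run(run(urls, num_consumers=8))` processes the same events with twice as many consumers, with no change to the producer side.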

Data Storage and Caching Layer

A well-designed storage layer is crucial for maintaining scraped data integrity and enabling fast retrieval. Implementing a combination of databases optimized for different access patterns enhances overall system performance. Consider using:

- Time-series databases for temporal data tracking

- Document stores for flexible schema requirements

- Relational databases for structured data with complex relationships

- In-memory caches for frequently accessed information

Implementation Strategy and Best Practices

Designing for Resilience

Building resilience into your event-driven scraper requires implementing comprehensive error handling and retry mechanisms. Circuit breaker patterns prevent cascading failures by temporarily disabling problematic endpoints, while exponential backoff strategies help manage rate limiting and temporary service unavailability.
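Both patterns fit in a few lines. The sketch below is a simplified breaker: it stays closed, opens after repeated failures, and allows trial requests after a cool-down. It is paired with exponential backoff using "full jitter", where the delay is drawn uniformly between zero and an exponentially growing cap. The thresholds and the injectable `clock` parameter (there so the breaker can be tested) are assumptions, not values from any particular library.

```python
import random
import time
from typing import Callable, Optional


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Full-jitter backoff: a random delay in [0, min(cap, base * 2**attempt)] seconds."""
    return random.uniform(0, min(cap, base * (2 ** attempt)))


class CircuitBreaker:
    """Opens after `threshold` consecutive failures and rejects calls for `reset_after` seconds.

    Once the cool-down has elapsed, calls are let through again as a trial:
    a success closes the breaker, another failure re-opens it immediately.
    """

    def __init__(self, threshold: int = 5, reset_after: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True  # closed: normal operation
        return self.clock() - self.opened_at >= self.reset_after  # open until cool-down ends

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.threshold:
            self.opened_at = self.clock()  # (re)start the cool-down
```

A consumer would keep one breaker per target endpoint and call `allow()` before fetching. It reports each result with `record_success()` or `record_failure()` and waits `backoff_delay(attempt)` before retrying a failed task.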

Dead letter queues serve as safety nets for events that cannot be processed successfully after multiple attempts. These queues enable manual inspection and reprocessing of failed tasks without losing valuable data or disrupting the main processing flow.
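The retry-then-park flow can be sketched without a broker. Managed systems often provide it natively, for example SQS redrive policies or RabbitMQ dead-letter exchanges. The in-memory version below only illustrates the logic, and `MAX_ATTEMPTS`, `Task`, and `always_fails` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable

MAX_ATTEMPTS = 3  # assumption: tune per workload


@dataclass
class Task:
    url: str
    attempts: int = 0


def process(task: Task, main_queue: deque, dead_letters: list,
            handler: Callable[[str], None]) -> None:
    """Run `handler`; on failure re-queue the task, or dead-letter it after MAX_ATTEMPTS."""
    try:
        handler(task.url)
    except Exception as exc:
        task.attempts += 1
        if task.attempts >= MAX_ATTEMPTS:
            # Keep the task and its last error for manual inspection and reprocessing.
            dead_letters.append((task, repr(exc)))
        else:
            main_queue.append(task)  # retry later without blocking other tasks


def always_fails(url: str) -> None:
    raise TimeoutError(f"timed out fetching {url}")


main_queue = deque([Task("https://example.com/a")])
dead_letters: list = []
while main_queue:
    process(main_queue.popleft(), main_queue, dead_letters, always_fails)
```

After three failed attempts, the task ends up in `dead_letters` with its last error instead of being lost.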

Rate Limiting and Politeness

Responsible scraping practices are essential for maintaining good relationships with target websites and avoiding legal issues. Implement adaptive rate limiting that adjusts request frequency based on server response times and error rates. This approach helps maintain optimal performance while respecting target site limitations.
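One simple way to adapt request frequency is to adjust a per-domain delay after every response. The delay doubles on HTTP 429, on a 5xx error, or on a slow answer, and shrinks gradually while responses stay healthy. This is a hypothetical sketch in the spirit of additive-increase/multiplicative-decrease control. The thresholds and step sizes are assumptions to tune per target.

```python
class AdaptiveRateLimiter:
    """Per-domain delay controller (illustrative, not a library API)."""

    def __init__(self, min_delay: float = 0.5, max_delay: float = 60.0,
                 slow_threshold: float = 2.0, step: float = 0.1):
        self.min_delay = min_delay            # fastest allowed pace (seconds between requests)
        self.max_delay = max_delay            # slowest pace we back off to
        self.slow_threshold = slow_threshold  # response time (s) treated as a sign of strain
        self.step = step                      # additive speed-up per healthy response
        self.delay = min_delay

    def observe(self, response_time: float, status: int) -> float:
        """Update and return the delay to wait before the next request to this domain."""
        strained = status == 429 or status >= 500 or response_time > self.slow_threshold
        if strained:
            self.delay = min(self.max_delay, self.delay * 2)  # multiplicative slow-down
        else:
            self.delay = max(self.min_delay, self.delay - self.step)  # gradual speed-up
        return self.delay
```

A consumer would keep one limiter per domain and sleep for the returned delay before its next request to that domain.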

Consider implementing jitter in your request timing to avoid creating predictable traffic patterns that might trigger anti-bot measures. Random delays between requests, combined with rotating user agents and IP addresses, help maintain scraping effectiveness over time.

Monitoring and Observability

Comprehensive monitoring is vital for maintaining a healthy event-driven scraping system. Implement metrics collection for key performance indicators such as:

- Event processing throughput and latency

- Success and failure rates for scraping operations

- Queue depth and consumer lag measurements

- Resource utilization across different system components

Real-time alerting on these metrics lets you resolve issues before they affect overall system performance. Consider tools such as Prometheus for metrics collection, Grafana for dashboards, and the ELK stack (Elasticsearch, Logstash, Kibana) for log aggregation and search.
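To illustrate what to collect, the in-process sketch below tracks success and failure counts, per-event latency, and throughput. It reports them together with a queue depth supplied by the caller, since reading queue depth depends on your broker. In a real deployment these values would be exported through a metrics client rather than kept in a Python object, and `ScraperMetrics` is a hypothetical name.

```python
import time
from collections import Counter
from statistics import quantiles


class ScraperMetrics:
    """In-process stand-in for a metrics client (illustrative only)."""

    def __init__(self):
        self.outcomes = Counter()  # "success" / "failure" counts
        self.latencies = []        # seconds per processed event
        self.started = time.monotonic()

    def record(self, latency: float, ok: bool) -> None:
        self.outcomes["success" if ok else "failure"] += 1
        self.latencies.append(latency)

    def snapshot(self, queue_depth: int) -> dict:
        total = sum(self.outcomes.values())
        elapsed = max(time.monotonic() - self.started, 1e-9)  # avoid division by zero
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        p95 = quantiles(self.latencies, n=20)[-1] if len(self.latencies) >= 2 else None
        return {
            "throughput_per_s": total / elapsed,
            "failure_rate": self.outcomes["failure"] / total if total else 0.0,
            "p95_latency_s": p95,
            "queue_depth": queue_depth,
        }
```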

Scaling Strategies and Performance Optimization

Horizontal Scaling Approaches

Event-driven architecture naturally supports horizontal scaling: you add throughput by adding event consumers. Container orchestration platforms like Kubernetes can scale consumers automatically based on CPU utilization or, when an external metric is configured, on queue depth. This elasticity keeps resource allocation in line with varying workloads.
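When scaling on queue depth, the calculation usually comes down to dividing the backlog by the number of pending events each consumer should handle. Autoscalers such as KEDA, or the Horizontal Pod Autoscaler driven by a custom metric, perform essentially this arithmetic. The function below is a simplified, hypothetical version of it, not either tool's API.

```python
import math


def desired_consumers(queue_depth: int, target_per_consumer: int,
                      min_replicas: int = 1, max_replicas: int = 50) -> int:
    """Replica count that gives each consumer about `target_per_consumer` pending events."""
    wanted = math.ceil(queue_depth / target_per_consumer)
    return max(min_replicas, min(max_replicas, wanted))  # clamp to the allowed range
```

For example, a backlog of 950 events with a target of 100 per consumer yields 10 consumers, and an empty queue falls back to `min_replicas`.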

Consumer groups balance load across multiple instances. In Kafka, for instance, each partition is read by exactly one consumer in the group, so event ordering is preserved within a partition. Partitioning strategies based on data characteristics or target domains can therefore keep related tasks in order while further optimizing processing efficiency.
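Partitioning by target domain can be as simple as hashing the hostname to a partition number, so every task for one site lands on the same partition. The sketch uses SHA-256 because Python's built-in `hash()` is randomized per process for strings and would route the same domain differently across producers. With Kafka, using the domain as the message key gets a similar effect from the client's default partitioner. `partition_for` is a hypothetical helper.

```python
import hashlib
from urllib.parse import urlsplit


def partition_for(url: str, num_partitions: int) -> int:
    """Stable mapping from a URL's hostname to a partition index."""
    host = (urlsplit(url).hostname or "").lower()
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce modulo the partition count.
    return int.from_bytes(digest[:8], "big") % num_partitions
```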

Caching and Data Deduplication

Intelligent caching strategies significantly reduce redundant scraping operations and improve overall system efficiency. Implement content-based caching that stores scraped data with appropriate TTL values based on data volatility characteristics.
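You can sketch a content cache with per-entry TTLs using a dictionary of expiry timestamps. The `TTL_BY_KIND` values below are hypothetical examples of tying TTL to volatility: short for prices, long for static descriptions. A shared deployment would more likely use a store with built-in expiry, such as Redis.

```python
import time
from typing import Any, Callable, Optional

# Hypothetical volatility classes, in seconds.
TTL_BY_KIND = {"price": 300, "product_description": 86_400}


class TTLCache:
    """Content cache where each entry expires after its own TTL."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._store: dict = {}  # key -> (expires_at, value)
        self._clock = clock

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._store[key] = (self._clock() + ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # stale: the caller should scrape again
            return None
        return value
```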

Deduplication mechanisms prevent processing identical requests multiple times, reducing both computational overhead and the risk of overwhelming target websites. Hash-based fingerprinting of requests and responses enables efficient duplicate detection.
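A request fingerprint only deduplicates well if equivalent requests hash identically. The sketch therefore canonicalizes the URL first (lowercased scheme and host, sorted query parameters, fragment dropped) before hashing it together with the method and body. The canonicalization rules and the in-memory `seen` set are assumptions. At scale the set would live in shared storage so all consumers see it.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def request_fingerprint(method: str, url: str, body: bytes = b"") -> str:
    """SHA-256 over a canonical form of the request."""
    parts = urlsplit(url)
    # Canonicalize: lowercase scheme/host, sort query parameters, drop the fragment.
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    canonical_url = urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                                parts.path or "/", query, ""))
    h = hashlib.sha256()
    h.update(method.upper().encode("utf-8"))
    h.update(b"\n" + canonical_url.encode("utf-8"))
    h.update(b"\n" + body)
    return h.hexdigest()


class Deduplicator:
    def __init__(self):
        self.seen = set()

    def is_new(self, method: str, url: str, body: bytes = b"") -> bool:
        fp = request_fingerprint(method, url, body)
        if fp in self.seen:
            return False
        self.seen.add(fp)
        return True
```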

Dynamic Resource Allocation

Advanced scaling implementations incorporate predictive scaling based on historical patterns and external factors. Machine learning models can forecast scraping demand and automatically adjust resource allocation to maintain optimal performance levels.

Consider implementing priority queues that process high-priority events first while ensuring fair resource allocation for lower-priority tasks. This approach maximizes the value extracted from available computational resources.
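One way to get both behaviors is priority aging: a task's effective priority improves the longer it waits, so low-priority work is eventually served even under a steady stream of urgent events. The sketch below is built on Python's `heapq` and is illustrative only. The `aging_rate` value and the "lower number is more urgent" convention are assumptions.

```python
import heapq
import itertools


class AgingPriorityQueue:
    """Min-heap where lower numbers are served first and waiting improves a task's rank."""

    def __init__(self, aging_rate: float = 0.1):
        self.aging_rate = aging_rate   # priority points gained per second of waiting
        self._heap: list = []
        self._seq = itertools.count()  # tie-breaker: FIFO among equal keys

    def push(self, task, priority: float, enqueued_at: float) -> None:
        # Effective priority at time `now` is priority - aging_rate * (now - enqueued_at).
        # The aging_rate * now term is shared by all tasks, so this key orders them identically.
        key = priority + self.aging_rate * enqueued_at
        heapq.heappush(self._heap, (key, next(self._seq), task))

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Because the ordering key never changes once a task is queued, the heap never has to be rebuilt as time passes.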

Security and Compliance Considerations

Data Protection and Privacy

Modern scraping operations must comply with various data protection regulations such as GDPR, CCPA, and industry-specific requirements. Implement data anonymization and pseudonymization techniques where appropriate, and maintain detailed audit trails for compliance reporting.
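Some identifiers must stay joinable, for example the same email address appearing across scraped records. For these, a keyed hash is a common pseudonymization technique. The sketch uses HMAC-SHA256 from Python's standard library. Normalizing to lowercase and storing the key separately from the data are assumptions about your requirements.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a personal identifier with a stable, keyed token (HMAC-SHA256, hex-encoded)."""
    normalized = value.strip().lower()  # assumption: identifiers are case-insensitive
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
```

Unlike a plain unkeyed hash, someone without the key cannot confirm a guess by hashing candidate values themselves. In practice the key would come from a secrets manager, generated with something like `secrets.token_bytes(32)`.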

Encrypt data in transit with protocols such as TLS, and implement access controls that limit data exposure to authorized personnel only. Regular security audits help identify and address potential vulnerabilities before they can be exploited.

Legal and Ethical Scraping Practices

Respect robots.txt files and terms of service for target websites, implementing automatic compliance checking as part of your scraping workflow. Maintain detailed logs of scraping activities for legal documentation and dispute resolution purposes.
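Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`, which a consumer can consult before fetching. The sketch parses an inline robots.txt body so it runs offline. In practice you would fetch and cache each host's `/robots.txt`, and `USER_AGENT` is a hypothetical identifier for your scraper.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper/1.0"  # hypothetical user-agent string

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_checker(robots_txt: str) -> RobotFileParser:
    """Parse an already-fetched robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


checker = build_checker(ROBOTS_TXT)
print(checker.can_fetch(USER_AGENT, "https://example.com/products"))      # True
print(checker.can_fetch(USER_AGENT, "https://example.com/private/data"))  # False
print(checker.crawl_delay(USER_AGENT))                                    # 5
```

A consumer can drop disallowed URLs before fetching and feed `crawl_delay` into its per-domain pacing.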

Consider implementing consent management systems for scenarios involving personal data collection, ensuring transparency and user control over data usage.

Advanced Features and Future Enhancements

Machine Learning Integration

Incorporating machine learning capabilities enhances scraper intelligence and adaptability. Implement anomaly detection systems that identify unusual patterns in scraped data or system behavior, enabling proactive issue resolution.
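Anomaly detection does not have to start with a trained model. A z-score check on a per-run metric, such as the number of records extracted from a site, is a simpler statistical stand-in (not a machine-learning method). It can flag a sudden drop caused by a changed page layout. The threshold of three standard deviations is a conventional but arbitrary assumption.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any deviation from a perfectly constant history is unusual
    return abs(latest - mu) / sigma > threshold
```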

Natural language processing techniques can improve data extraction accuracy from unstructured content, while computer vision algorithms enable effective handling of image-based information.

Real-time Data Processing

Stream processing frameworks like Apache Spark (through Structured Streaming) or Apache Flink enable real-time analysis of scraped data as it flows through the system. This capability supports use cases requiring immediate insights or rapid response to data changes.

Implement change detection mechanisms that trigger downstream processes only when meaningful data modifications occur, reducing unnecessary computational overhead.
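One way to define a "meaningful" modification is to hash only the fields that matter and compare against the previous hash for the same URL. In the sketch below, the ignored field `scraped_at` is a hypothetical example of volatile metadata that should not count as a change.

```python
import hashlib
import json


class ChangeDetector:
    """Remember a content hash per URL and report whether new scraped data differs."""

    def __init__(self, ignore_fields=("scraped_at",)):
        self.ignore_fields = set(ignore_fields)
        self._last: dict = {}  # url -> last digest

    def _digest(self, record: dict) -> str:
        relevant = {k: v for k, v in record.items() if k not in self.ignore_fields}
        # sort_keys makes the hash independent of field order.
        return hashlib.sha256(json.dumps(relevant, sort_keys=True).encode("utf-8")).hexdigest()

    def changed(self, url: str, record: dict) -> bool:
        digest = self._digest(record)
        if self._last.get(url) == digest:
            return False  # nothing meaningful changed: skip downstream processing
        self._last[url] = digest
        return True
```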

Deployment and Maintenance Strategies

Infrastructure as Code

Utilize infrastructure as code tools like Terraform or AWS CloudFormation to ensure consistent and reproducible deployments across different environments. Version control for infrastructure configurations enables rollback capabilities and change tracking.

Implement blue-green deployment strategies that minimize downtime during system updates while providing immediate rollback options if issues arise during deployment.

Performance Tuning and Optimization

Regular performance analysis helps identify bottlenecks and optimization opportunities within your event-driven scraping system. Profile different components to understand resource consumption patterns and optimize accordingly.

Consider implementing adaptive algorithms that automatically tune system parameters based on observed performance characteristics and changing workload patterns.

Conclusion

Building a scalable event-driven scraper requires careful consideration of architecture patterns, implementation strategies, and operational best practices. The event-driven approach offers significant advantages in terms of scalability, resilience, and resource efficiency compared to traditional scraping methods.

Success depends on implementing robust monitoring systems, maintaining compliance with legal and ethical standards, and continuously optimizing performance based on real-world usage patterns. By following the strategies outlined in this guide, organizations can build scraping systems that reliably extract valuable data at scale while maintaining operational excellence.

The future of web scraping lies in intelligent, adaptive systems that can respond dynamically to changing conditions while respecting the rights and resources of data providers. Event-driven architecture provides the foundation for building such systems, enabling organizations to harness the full potential of web-based data sources in an efficient and responsible manner.